Airflow and GitHub Actions can both be classified primarily as "Workflow Manager" tools. With GitHub Actions you can build, test, and deploy your code right from GitHub, and make code reviews, branch management, and issue triaging work the way you want. One of the features offered by Airflow is that it is dynamic: Airflow pipelines are configuration as code (Python), allowing for dynamic pipeline generation.
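As a rough illustration of what "configuration as code" makes possible, the sketch below generates one task per entry in a list. It uses plain Python rather than the Airflow API, and the names `make_extract_task`, `build_pipeline`, and the table names are hypothetical, chosen only for this example.

```python
from typing import Callable, Dict, List


def make_extract_task(table: str) -> Callable[[], str]:
    """Return a task callable bound to one (hypothetical) table name."""
    def task() -> str:
        return f"extracted {table}"
    return task


def build_pipeline(tables: List[str]) -> Dict[str, Callable[[], str]]:
    """Generate one named task per table; the task ids come from the data."""
    return {f"extract_{table}": make_extract_task(table) for table in tables}


# Adding a table to this list adds a task, with no other code changes.
pipeline = build_pipeline(["users", "orders"])
results = {task_id: task() for task_id, task in pipeline.items()}
print(results)
```

Because the pipeline is ordinary Python, loops, functions, and configuration files can all drive which tasks exist.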


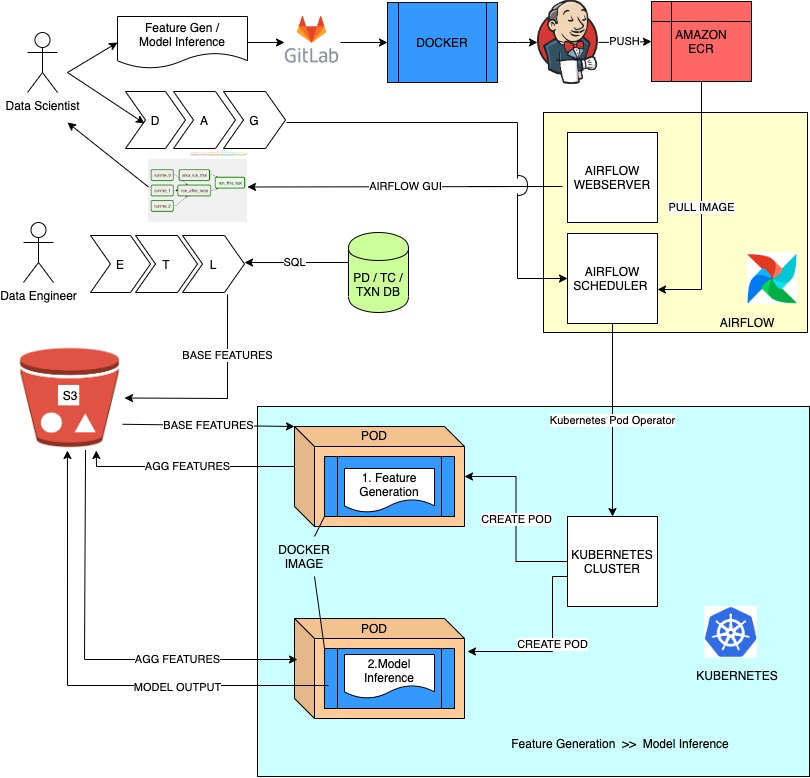

Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

GitHub Actions: Automate your workflow from idea to production. It makes it easy to automate all your software workflows, now with world-class CI/CD.
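To make "following the specified dependencies" concrete, here is a minimal sketch that runs tasks in dependency order using the standard library's `graphlib` module. It is not how the Airflow scheduler is implemented, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter  # available in Python 3.9+

# Hypothetical tasks; each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def run_in_dependency_order(deps):
    """Visit each task only after all of its upstream tasks were visited."""
    executed = []
    for task in TopologicalSorter(deps).static_order():
        # A real scheduler would dispatch the task to a worker here.
        executed.append(task)
    return executed


order = run_in_dependency_order(dependencies)
print(order)
```

If the graph contained a cycle, `static_order()` would raise `graphlib.CycleError`, which is why workflows are required to be acyclic.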


Airflow vs GitHub Actions: What are the differences?

Airflow: A platform to programmatically author, schedule and monitor data pipelines, by Airbnb.

The `airflow_kubernetes_job_operator` package provides the KubernetesJobOperator and the KubernetesLegacyJobOperator. KubernetesLegacyJobOperator (only Airflow 2.0 and up): defaults to a Kubernetes Job definition and supports the same arguments as the KubernetesPodOperator, so it can be swapped in for the KubernetesPodOperator for legacy support.

Both operators are imported alongside the DAG class: `from airflow import DAG`, `from airflow_kubernetes_job_operator.kubernetes_job_operator import KubernetesJobOperator`, and `from airflow_kubernetes_job_operator.kubernetes_legacy_job_operator import KubernetesLegacyJobOperator`.

When executing Pod resources via YAML, we can define the main container that the status is taken from. Other sidecars can then be loaded before the pod completes (for now, this is not available for Job).

The operator needs a Kubernetes Role (`apiVersion: /v1`, `kind: Role`) whose resources include `jobs`, `configmaps` (required for using configmaps in the job), and `secrets` (required if reading secrets in the job).

Why would this be better than the KubernetesPodOperator?

The KubernetesJobOperator allows more advanced features such as multiple resource execution, custom resource executions, creation event and pod log tracking, proper resource deletion (after the task is complete, on error, or when the task is cancelled), and more.

Further, in the KubernetesPodOperator the monitoring between the worker pod and Airflow is done by an internal loop which consumes worker resources. In this operator, an async threaded approach was taken which reduces resource consumption on the worker. Since the logging and parsing of data is done outside of the main worker thread, the worker is "free" to handle other tasks without interruption.

Finally, using the KubernetesJobOperator you are free to use other resources, like the Kubernetes Job, to execute your tasks. These are better designed for use in Kubernetes and are "up with the times".

Known issue: when running long tasks on AWS, the job is actively disconnected from the server (Connection broken: ConnectionResetError, 104, 'Connection reset by peer'), see here and here. We are looking for a solution for it, but have no AWS resources for testing. Contributions welcome.

Contributions are welcome; please post issues or PRs if needed.

The package is free software, released under the MIT licence, and may be redistributed under the terms specified in LICENSE.
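The idea of moving log handling off the main worker thread can be sketched with the standard library alone. This is an illustration of the general technique, not the operator's actual implementation; `parse_log_lines`, `run_with_background_logs`, and the sample log lines are hypothetical.

```python
import queue
import threading
from typing import Iterable, List


def parse_log_lines(lines: Iterable[str], parsed: queue.Queue) -> None:
    """Runs in a background thread: clean up raw lines and hand them over."""
    for line in lines:
        parsed.put(line.strip())
    parsed.put(None)  # sentinel: no more log lines will arrive


def run_with_background_logs(raw_lines: List[str]) -> List[str]:
    """Start the log parser in its own thread and collect its output."""
    parsed: queue.Queue = queue.Queue()
    watcher = threading.Thread(
        target=parse_log_lines, args=(raw_lines, parsed), daemon=True
    )
    watcher.start()
    # The main thread is not busy parsing; it only consumes finished items
    # and could do other work between reads.
    collected = []
    while True:
        item = parsed.get()
        if item is None:
            break
        collected.append(item)
    watcher.join()
    return collected


logs = run_with_background_logs(["  pod started \n", " job complete\n"])
print(logs)
```

The queue is the only point of contact between the two threads, so the parsing work never blocks the caller except while it waits for the next finished item.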
